Point Policy: Unifying Observations and Actions with Key Points for Robot Manipulation

Abstract

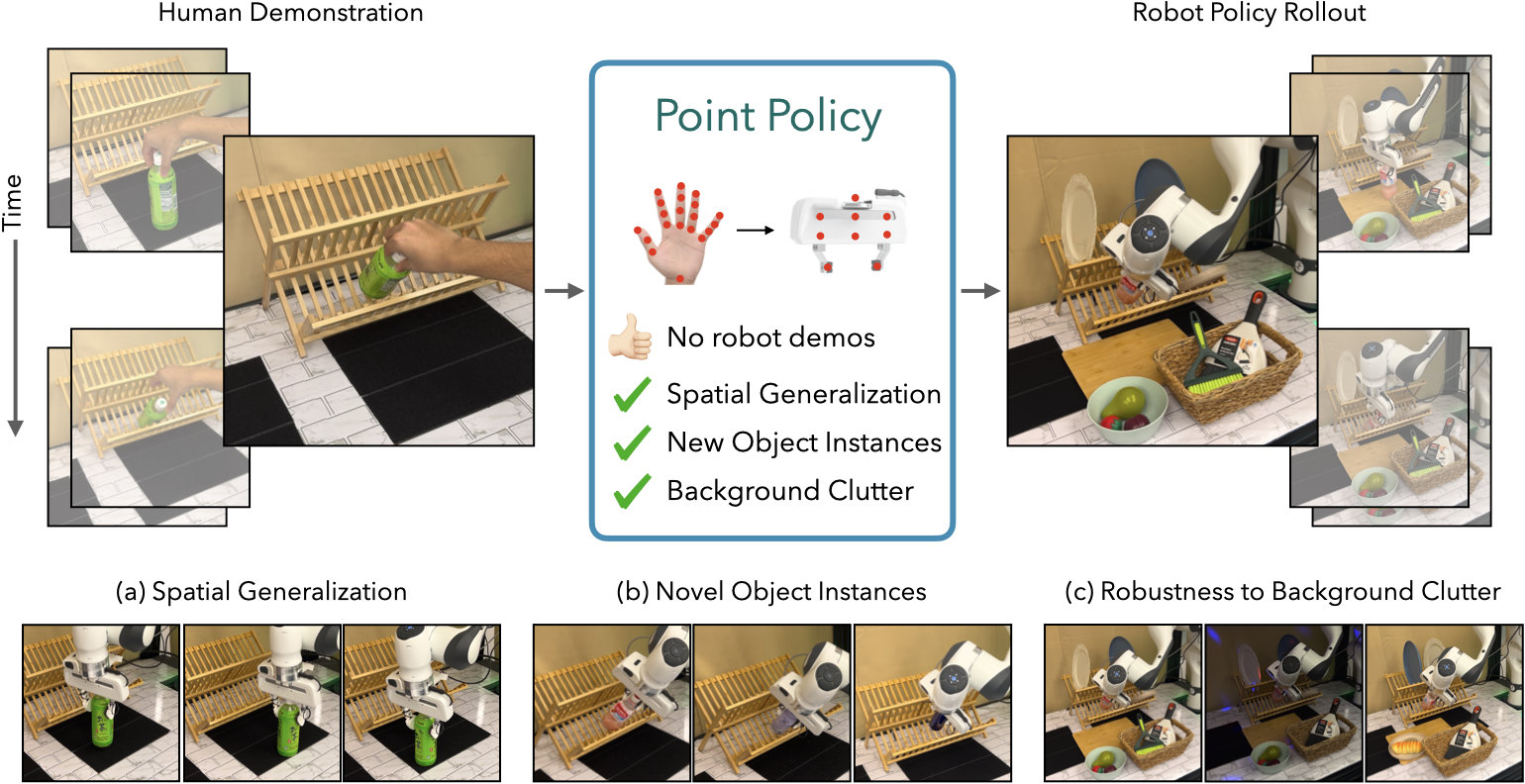

Building robotic agents capable of operating across diverse environments and object types remains a significant challenge, often requiring extensive data collection. This is particularly restrictive in robotics, where each data point must be physically executed in the real world. Consequently, there is a critical need for alternative data sources for robotics and frameworks that enable learning from such data. In this work, we present Point Policy, a new method for learning robot policies exclusively from offline human demonstration videos and without any teleoperation data. Point Policy leverages state-of-the-art vision models and policy architectures to translate human hand poses into robot poses while capturing object states through semantically meaningful key points. This approach yields a morphology-agnostic representation that facilitates effective policy learning. Our experiments on 8 real-world tasks demonstrate an overall 75% absolute improvement over prior works when evaluated in identical settings as training. Further, Point Policy exhibits a 74% gain across tasks for novel object instances and is robust to significant background clutter.

Proposed Framework

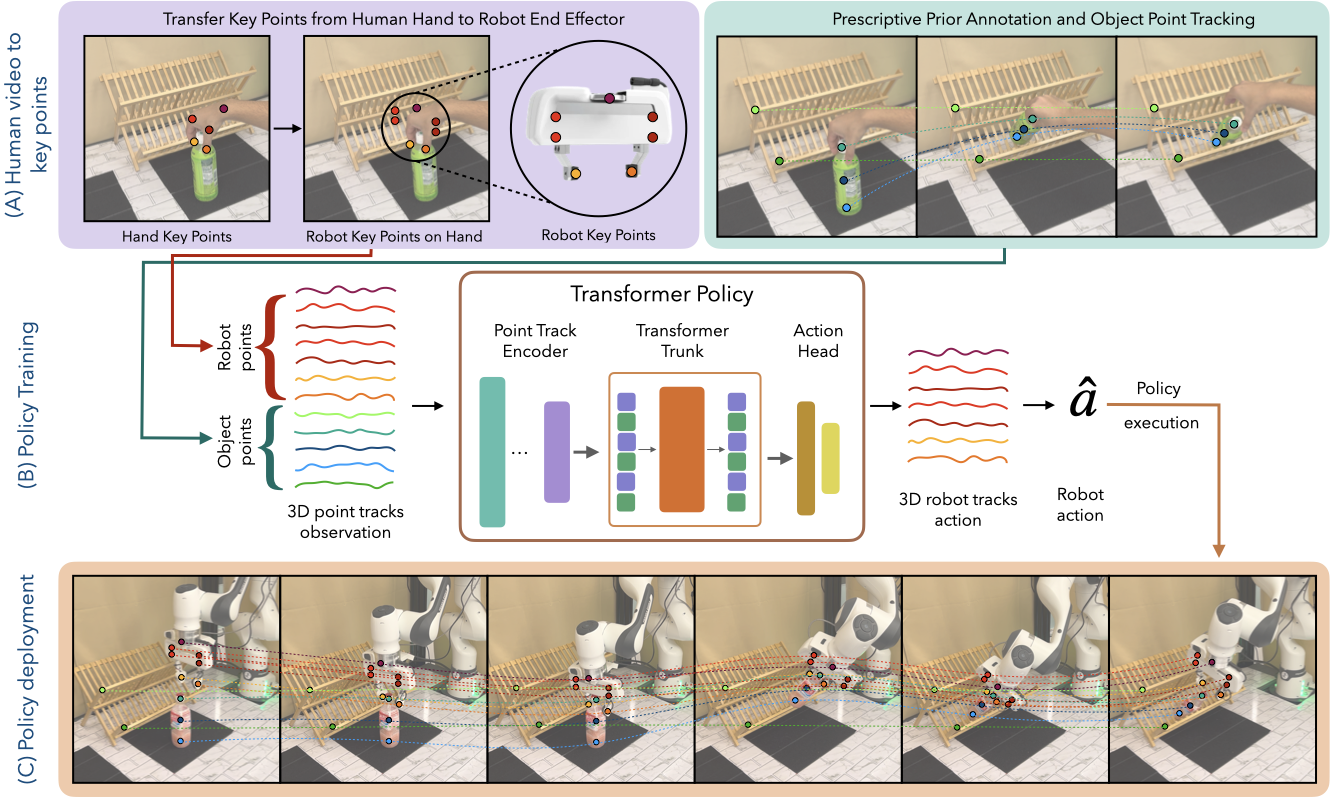

Point Policy leverages state-of-the-art vision models and policy architectures to translate human hand poses into robot poses while capturing object states through sparse single-frame human annotations. The derived key points are fed into a transformer policy that predicts future 3D point tracks, from which the robot actions are computed through rigid-body geometry constraints. Finally, the computed action is executed on the robot using end-effector position control at 6 Hz.
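The last step, turning predicted 3D points into a robot action through rigid-body geometry, can be illustrated with a minimal sketch. It assumes three non-collinear key points rigidly attached to the end effector and builds an orthonormal frame from them. The function name `pose_from_key_points` and the choice of exactly three points are hypothetical and are not taken from the Point Policy implementation.

```python
import math


def sub(a, b):
    """Component-wise difference a - b of two 3D points."""
    return [a[i] - b[i] for i in range(3)]


def cross(a, b):
    """Cross product of two 3D vectors."""
    return [
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    ]


def normalize(v):
    """Scale v to unit length; reject degenerate (near-zero) vectors."""
    n = math.sqrt(sum(c * c for c in v))
    if n < 1e-9:
        raise ValueError("key points are coincident or collinear")
    return [c / n for c in v]


def pose_from_key_points(p0, p1, p2):
    """Recover a pose (R, t) from three rigidly attached 3D points.

    Assumed convention (illustrative only): p0 is the frame origin,
    p0 -> p1 defines the x-axis, and p2 lies in the xy-plane.
    R is a 3x3 nested list whose columns are the x, y, z axes.
    """
    x = normalize(sub(p1, p0))
    z = normalize(cross(x, sub(p2, p0)))
    y = cross(z, x)  # already unit length because z and x are orthonormal
    R = [[x[i], y[i], z[i]] for i in range(3)]
    return R, list(p0)


# Example: points rotated 90 degrees about z and shifted to (1, 2, 3).
R, t = pose_from_key_points([1, 2, 3], [1, 3, 3], [0, 2, 3])
```

Because the three points move together as a rigid body, the frame they span determines the end-effector position and orientation. With more than three points, a least-squares rigid fit would be a natural alternative.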

In-domain Performance

We evaluate Point Policy on 8 real-world tasks on a Franka Research 3 robot. Here, we present the results of evaluations conducted in an in-domain setting with significant spatial variations. Point Policy achieves an average success rate of 88% across all tasks, outperforming the strongest baseline by 75% (absolute). We provide videos of successful rollouts below (all played at 2X speed).
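To make the "75%" figure concrete, the short sketch below separates an absolute gain (in percentage points) from a relative gain. It assumes the 75% is an absolute difference in success rate, as the abstract states. The implied baseline value is derived from the two numbers quoted above and is not a separately reported result; the function names are illustrative.

```python
def implied_baseline(ours_pct, absolute_gain_pct):
    """Baseline success rate implied by our rate and an absolute gain."""
    return ours_pct - absolute_gain_pct


def relative_gain(ours_pct, baseline_pct):
    """Improvement expressed as a fraction of the baseline rate."""
    return (ours_pct - baseline_pct) / baseline_pct


ours = 88   # average in-domain success rate quoted above (%)
gain = 75   # absolute improvement quoted above (percentage points)

baseline = implied_baseline(ours, gain)   # derived, not a reported figure
ratio = relative_gain(ours, baseline)     # same gap as a relative gain

print(f"implied baseline: {baseline}%")
print(f"relative gain: {ratio:.2f}x the baseline rate")
```

Read this way, a 75-point absolute gain from a low baseline corresponds to a several-fold relative improvement, which is why the two conventions should not be mixed when comparing results.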

Performance on Novel Object Instances

We evaluate Point Policy on 6 tasks with novel object instances unseen in the training data. Point Policy achieves an average success rate of 74% across all tasks, outperforming the strongest baseline by 73%. We provide videos of successful rollouts below (all played at 2X speed).

Performance with Background Distractors

We evaluate Point Policy in the presence of background clutter on 3 tasks, using both in-domain and novel object instances. Point Policy is robust to background clutter, showing comparable performance or only minimal degradation when background distractors are present. We provide videos of successful rollouts below (all played at 2X speed).

Extreme Examples

Here we include rollouts of Point Policy operating under heavy scene variations on both in-domain and novel object instances. We observe that despite the significant visual variations in the scene, Point Policy succeeds at completing the tasks. This robustness can be attributed to Point Policy’s use of point-based representations, which are decoupled from raw pixel values. By focusing on semantically meaningful points rather than image-level features, Point Policy enables policies that are resilient to environmental perturbations. All videos are played at 2X speed.

Failure Examples

Below we provide examples of failures of Point Policy on in-domain as well as novel object instances. All videos are played at 2X speed.

In-domain Objects

Novel Object Instances

Bibtex

@article{haldar2025point,
  title={Point Policy: Unifying Observations and Actions with Key Points for Robot Manipulation},
  author={Haldar, Siddhant and Pinto, Lerrel},
  journal={arXiv preprint arXiv:2502.20391},
  year={2025}
}